Introduction

TensorFlow, an open-source machine learning framework developed by Google, has become one of the most widely used platforms for developing and training machine learning (ML) models. With its comprehensive ecosystem of tools and libraries, TensorFlow enables developers and researchers to build, train, and deploy ML models efficiently. It is also increasingly covered in Data Scientist Classes. This article explores the key aspects of developing and training ML models with TensorFlow, giving an overview of its features and workflow along with practical examples.

What is TensorFlow?

TensorFlow is a versatile ML framework designed to facilitate the creation of deep learning models. It provides a flexible and scalable platform for building and deploying ML applications, supporting tasks ranging from simple linear regression to complex neural networks. TensorFlow can run the same computations efficiently on CPUs, GPUs, and TPUs (Tensor Processing Units, Google's custom ML accelerators). It is often covered in advanced Data Science Courses in cities such as Bangalore, Pune, and Delhi, where researchers, scientists, and business developers are keen to learn advanced machine learning modelling techniques.
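As a small illustration of device support, the sketch below lists the devices TensorFlow can see and pins a matrix multiplication to the CPU with `tf.device`. It assumes only a standard `pip install tensorflow`; on a machine without a GPU, the GPU list is simply empty.

```python
import tensorflow as tf

# List every physical device TensorFlow detected (a CPU is always present).
all_devices = tf.config.list_physical_devices()
gpus = tf.config.list_physical_devices("GPU")
print("Devices:", [d.name for d in all_devices])
print("GPUs available:", len(gpus))

# Explicitly place a small matrix multiplication on the CPU.
with tf.device("/CPU:0"):
    a = tf.constant([[1.0, 2.0], [3.0, 4.0]])
    b = tf.constant([[5.0, 6.0], [7.0, 8.0]])
    product = tf.matmul(a, b)

print(product.device)    # full device string of the result
print(product.numpy())   # [[19. 22.] [43. 50.]]
```

Removing the `tf.device` block lets TensorFlow choose the device itself, which typically means a GPU when one is available.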

Key Features of TensorFlow

Comprehensive Ecosystem

TensorFlow offers a rich set of tools and libraries, including TensorFlow Hub (a repository of pretrained, reusable models), TensorFlow Lite (for running models on mobile and embedded devices), and TensorFlow Extended (TFX, for building production ML pipelines). Data Scientist Classes aimed at professionals and researchers typically teach learners how to use these tools at various stages of the ML pipeline, from model building to deployment.
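To make the deployment end of that pipeline concrete, here is a minimal sketch of converting a TensorFlow computation to the TensorFlow Lite format with `tf.lite.TFLiteConverter.from_concrete_functions`. The `AffineModule` class is a hypothetical stand-in for a trained model, and the output file name is illustrative. For Keras models there is also `TFLiteConverter.from_keras_model`, though its behaviour can vary across TensorFlow and Keras versions.

```python
import os
import tempfile

import tensorflow as tf


class AffineModule(tf.Module):
    """Hypothetical toy model: computes 2 * x + 1 element-wise."""

    @tf.function(input_signature=[tf.TensorSpec(shape=[1, 4], dtype=tf.float32)])
    def __call__(self, x):
        return 2.0 * x + 1.0


module = AffineModule()
concrete_fn = module.__call__.get_concrete_function()

# Convert the traced graph into a TensorFlow Lite flatbuffer (returned as raw bytes).
converter = tf.lite.TFLiteConverter.from_concrete_functions([concrete_fn], module)
tflite_model = converter.convert()

# Write the bytes to a file that a mobile or embedded app could bundle.
path = os.path.join(tempfile.gettempdir(), "affine.tflite")
with open(path, "wb") as f:
    f.write(tflite_model)
print(f"TFLite model size: {len(tflite_model)} bytes")
```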

Scalability

TensorFlow's architecture is designed to scale from a single device to multiple GPUs, TPUs, or clusters of machines, largely through its distribution strategies (the tf.distribute API), making it suitable for both research and production environments.
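A minimal sketch of single-machine data parallelism with `tf.distribute.MirroredStrategy` follows. The strategy replicates the model on every visible GPU and falls back to a single CPU replica otherwise, so it runs anywhere. The layer sizes and per-replica batch size are arbitrary illustrative values. Multi-machine training uses other strategies, such as `MultiWorkerMirroredStrategy`, which need additional cluster configuration not shown here.

```python
import tensorflow as tf

# One replica per visible GPU; a single CPU replica if no GPU is found.
strategy = tf.distribute.MirroredStrategy()
print("Replicas in sync:", strategy.num_replicas_in_sync)

# Variables created inside the scope are mirrored across all replicas.
with strategy.scope():
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(8,)),
        tf.keras.layers.Dense(16, activation="relu"),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="adam", loss="mse")

# A common convention: scale the global batch size with the replica count.
per_replica_batch = 32
global_batch = per_replica_batch * strategy.num_replicas_in_sync
print("Global batch size:", global_batch)
```

Calling `model.fit` afterwards works as usual; Keras splits each global batch across the replicas.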

Keras API

TensorFlow integrates with Keras, a high-level API that simplifies the process of building and training ML models. Keras provides an intuitive interface for defining and training neural networks, making TensorFlow accessible to beginners and experts alike.
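Besides the `Sequential` style used later in this article, Keras offers a Functional API in which layers are called on tensors to wire up a graph, which is useful for models with branches or multiple inputs. The sketch below builds a small regression model this way. The layer sizes and the names `features` and `prediction` are arbitrary choices for illustration.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

# Functional API: each layer is called on the output of the previous one.
inputs = tf.keras.Input(shape=(4,), name="features")
hidden = layers.Dense(8, activation="relu")(inputs)
outputs = layers.Dense(1, name="prediction")(hidden)
model = tf.keras.Model(inputs=inputs, outputs=outputs)

model.compile(optimizer="adam", loss="mse")

# Forward pass on a dummy batch of 3 samples with 4 features each.
batch = np.zeros((3, 4), dtype="float32")
preds = model(batch)
print(preds.shape)  # (3, 1)
```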

Eager Execution

Eager execution, the default mode in TensorFlow 2.x, evaluates operations immediately instead of first building a computational graph, enabling a more interactive and flexible programming experience. This is particularly useful for debugging and prototyping.
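The short sketch below shows eager evaluation returning a concrete value straight away, and then the same computation wrapped in `tf.function`, which traces it into a graph. Graph execution can speed up repeated calls, while the function is still called like ordinary Python.

```python
import tensorflow as tf

print(tf.executing_eagerly())  # True in TensorFlow 2.x

# Eager mode: the result is available immediately as a concrete value.
x = tf.constant([[1.0, 2.0], [3.0, 4.0]])
y = tf.reduce_sum(x * x)
print(y.numpy())  # 30.0


# tf.function traces the Python function into a TensorFlow graph.
@tf.function
def sum_of_squares(t):
    return tf.reduce_sum(t * t)


print(sum_of_squares(x).numpy())  # 30.0
```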

TensorBoard

TensorBoard is TensorFlow’s visualisation toolkit, providing tools for monitoring and debugging ML models. It offers visualisations for metrics, model graphs, and data, helping developers gain insights into their training processes.
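A common way to feed TensorBoard from Keras is the `tf.keras.callbacks.TensorBoard` callback, which writes event files during `model.fit`. The sketch below trains a tiny model on synthetic data. The temporary log directory and the data are placeholders for illustration only.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Hypothetical log directory; in practice something like "logs/fit/<run-name>".
log_dir = tempfile.mkdtemp(prefix="tb_logs_")
tensorboard_cb = tf.keras.callbacks.TensorBoard(log_dir=log_dir)

# Tiny synthetic regression problem, just to produce some metrics to log.
rng = np.random.default_rng(0)
x = rng.normal(size=(256, 4)).astype("float32")
y = (x.sum(axis=1, keepdims=True) + 0.1 * rng.normal(size=(256, 1))).astype("float32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer="adam", loss="mse")
model.fit(x, y, epochs=3, validation_split=0.2, callbacks=[tensorboard_cb], verbose=0)

# Collect the event files written under log_dir (train/ and validation/ subfolders).
event_files = [
    os.path.join(root, name)
    for root, _, files in os.walk(log_dir)
    for name in files
    if name.startswith("events.out.tfevents")
]
print(len(event_files), "event file(s) written")
```

To view the logs, run `tensorboard --logdir <log_dir>` and open the URL it prints (port 6006 by default).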

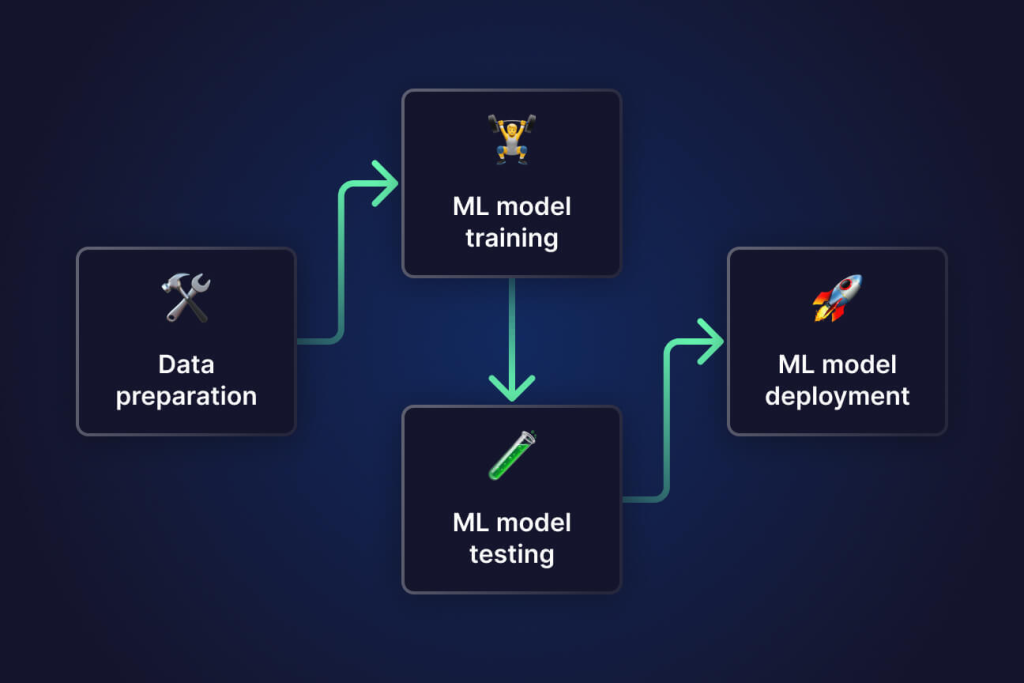

Workflow for Developing and Training ML Models

1. Setting Up the Environment

To get started with TensorFlow, you need to install it via pip:

```bash
pip install tensorflow
```

Once installed, you can import TensorFlow in your Python script:

```python
import tensorflow as tf
from tensorflow.keras import layers, models
```
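To confirm the installation works, you can print the installed version and run a small computation. This is similar to the check suggested in TensorFlow's installation guide. The exact sum varies from run to run because the input is random.

```python
import tensorflow as tf

# Print the installed version and run a small computation as a smoke test.
print("TensorFlow version:", tf.__version__)
result = tf.reduce_sum(tf.random.normal([1000, 1000]))
print("Sum of 1,000,000 random normals:", float(result))
```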

2. Preparing the Data

Data preparation is a crucial step in developing ML models. TensorFlow provides several utilities for loading and preprocessing data. For example, you can use tf.data to create efficient input pipelines:

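```python
# Load MNIST and scale pixel values from [0, 255] to [0, 1].
# [..., tf.newaxis] adds a channel axis so each image has shape (28, 28, 1),
# which the convolutional model in the next step expects.
(train_images, train_labels), (test_images, test_labels) = tf.keras.datasets.mnist.load_data()
train_images = train_images[..., tf.newaxis].astype('float32') / 255.0
test_images = test_images[..., tf.newaxis].astype('float32') / 255.0

# Wrap the arrays in tf.data pipelines; shuffle only the training set.
train_dataset = (
    tf.data.Dataset.from_tensor_slices((train_images, train_labels))
    .shuffle(10000)
    .batch(32)
)
test_dataset = tf.data.Dataset.from_tensor_slices((test_images, test_labels)).batch(32)
```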
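An MNIST pipeline like the one above keeps the whole dataset in memory. A fuller `tf.data` pipeline usually also applies preprocessing with `map` and prefetches, so that input preparation overlaps with training. The sketch below uses random synthetic images as a stand-in for MNIST, so nothing is downloaded. The buffer size, batch size, and seed are illustrative values.

```python
import numpy as np
import tensorflow as tf

# Synthetic stand-in for MNIST: 1,000 grayscale 28x28 images with labels 0-9.
rng = np.random.default_rng(42)
images = rng.integers(0, 256, size=(1000, 28, 28), dtype=np.uint8)
labels = rng.integers(0, 10, size=(1000,), dtype=np.int64)


def preprocess(image, label):
    # Add a channel axis and scale pixel values from [0, 255] to [0, 1].
    image = tf.cast(image[..., tf.newaxis], tf.float32) / 255.0
    return image, label


dataset = (
    tf.data.Dataset.from_tensor_slices((images, labels))
    .shuffle(buffer_size=1000, seed=42)                    # reshuffled every epoch by default
    .map(preprocess, num_parallel_calls=tf.data.AUTOTUNE)  # parallel preprocessing
    .batch(32)
    .prefetch(tf.data.AUTOTUNE)                            # overlap input prep with training
)

batch_images, batch_labels = next(iter(dataset))
print(batch_images.shape)  # (32, 28, 28, 1)
print(batch_labels.shape)  # (32,)
```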

3. Building the Model

Building a model in TensorFlow is straightforward with the Keras API. Here is an example of creating a simple convolutional neural network (CNN) for image classification:

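```python
model = models.Sequential([
    # Declare the input shape: 28x28 grayscale images with one channel
    layers.Input(shape=(28, 28, 1)),
    # Convolution + pooling stages extract increasingly abstract spatial features
    layers.Conv2D(32, (3, 3), activation='relu'),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    # Dense layers map the flattened features to probabilities for the 10 digits
    layers.Flatten(),
    layers.Dense(64, activation='relu'),
    layers.Dense(10, activation='softmax')
])

model.summary()
```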
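The numbers printed by `model.summary()` for this CNN can be derived by hand. With the Keras defaults of 'valid' (no) padding and stride 1, a 3x3 convolution shrinks each spatial side by 2, and 2x2 pooling halves it, rounding down. A Conv2D layer has (kernel_h x kernel_w x in_channels + 1) x filters parameters, and a Dense layer has (inputs + 1) x units. The helper functions below are hypothetical, written only for this illustration; the last lines cross-check the hand count against Keras.

```python
from tensorflow.keras import layers, models


def conv_out(size, kernel=3):
    # 'valid' padding, stride 1: output = input - kernel + 1
    return size - kernel + 1


def pool_out(size, pool=2):
    # Non-overlapping pooling rounds down
    return size // pool


def conv_params(kernel, in_ch, filters):
    # One kernel x kernel x in_ch weight tensor per filter, plus one bias each
    return (kernel * kernel * in_ch + 1) * filters


def dense_params(in_units, units):
    # Weight matrix plus one bias per unit
    return (in_units + 1) * units


side = 28
side = conv_out(side)    # 26
side = pool_out(side)    # 13
side = conv_out(side)    # 11
side = pool_out(side)    # 5
side = conv_out(side)    # 3
flat = side * side * 64  # 576 features after Flatten

total = (
    conv_params(3, 1, 32)      # 320
    + conv_params(3, 32, 64)   # 18,496
    + conv_params(3, 64, 64)   # 36,928
    + dense_params(flat, 64)   # 36,928
    + dense_params(64, 10)     # 650
)
print(flat, total)  # 576 93322

# Cross-check against Keras using the same architecture.
model = models.Sequential([
    layers.Input(shape=(28, 28, 1)),
    layers.Conv2D(32, (3, 3), activation="relu"),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation="relu"),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation="relu"),
    layers.Flatten(),
    layers.Dense(64, activation="relu"),
    layers.Dense(10, activation="softmax"),
])
print(model.count_params())  # 93322
```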

4. Compiling the Model

Before training, you need to compile the model, specifying the optimizer, loss function, and metrics:

```python
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
```
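The `sparse_categorical_crossentropy` loss chosen above expects integer class labels, as MNIST provides, rather than one-hot vectors. For each sample it is the negative log of the probability the model assigns to the true class, averaged over the batch. The sketch below checks that definition by hand against the Keras loss object on made-up probabilities.

```python
import numpy as np
import tensorflow as tf

# Made-up softmax outputs for 3 samples over 4 classes (each row sums to 1).
probs = np.array([
    [0.70, 0.10, 0.10, 0.10],
    [0.20, 0.50, 0.20, 0.10],
    [0.25, 0.25, 0.25, 0.25],
], dtype="float32")
labels = np.array([0, 1, 3])  # integer class indices, not one-hot

# Manual computation: mean of -log(probability of the true class).
manual = float(-np.mean(np.log(probs[np.arange(len(labels)), labels])))

# Keras loss object; from_logits=False by default, matching softmax outputs.
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy()
keras_value = float(loss_fn(labels, probs))

print(round(manual, 4), round(keras_value, 4))
```

Here the manual value is (-ln 0.7 - ln 0.5 - ln 0.25) / 3, roughly 0.812, and the Keras result should agree up to its small numerical clipping.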

5. Training the Model

Training the model involves feeding the data to the model and specifying the number of epochs:

```python
history = model.fit(train_dataset, epochs=10, validation_data=test_dataset)
```
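Conceptually, `model.fit` repeats a simple step for each batch: run a forward pass, compute the loss, take gradients with respect to the trainable weights, and update them. The sketch below writes that loop out by hand with `tf.GradientTape` on a toy linear-regression problem (fitting y = 3x + 2). To keep the update rule visible, it swaps Adam for plain gradient descent with an illustrative learning rate of 0.1. It shows the mechanics only and is not what Keras literally executes internally.

```python
import tensorflow as tf

# Toy data: y = 3x + 2 on 100 evenly spaced points in [-1, 1].
x = tf.linspace(-1.0, 1.0, 100)
y = 3.0 * x + 2.0

# Trainable parameters, initialised at zero.
w = tf.Variable(0.0)
b = tf.Variable(0.0)
learning_rate = 0.1

for step in range(300):
    with tf.GradientTape() as tape:
        y_pred = w * x + b                            # forward pass
        loss = tf.reduce_mean(tf.square(y_pred - y))  # mean squared error
    grad_w, grad_b = tape.gradient(loss, [w, b])      # backpropagation
    w.assign_sub(learning_rate * grad_w)              # gradient-descent update
    b.assign_sub(learning_rate * grad_b)

print(f"w = {w.numpy():.3f}, b = {b.numpy():.3f}, loss = {loss.numpy():.6f}")
```

After training, `w` and `b` should be very close to 3 and 2. In a real Keras workflow, the equivalent per-epoch losses are available in `history.history['loss']`.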

6. Evaluating the Model

After training, you can evaluate the model’s performance on the test data:

```python
test_loss, test_acc = model.evaluate(test_dataset, verbose=2)
print(f'\nTest accuracy: {test_acc}')
```
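The accuracy reported by `model.evaluate` is the fraction of samples whose highest-probability class matches the label. For new inputs you would typically call `model.predict` and take the `argmax` of each probability row. The sketch below reproduces that calculation on made-up probabilities and compares it with the Keras `SparseCategoricalAccuracy` metric.

```python
import numpy as np
import tensorflow as tf

# Made-up model outputs for 4 samples over 3 classes.
probs = np.array([
    [0.10, 0.70, 0.20],
    [0.80, 0.10, 0.10],
    [0.30, 0.30, 0.40],
    [0.25, 0.50, 0.25],
], dtype="float32")
labels = np.array([1, 0, 1, 1])

# Predicted class = index of the largest probability in each row.
predicted = np.argmax(probs, axis=1)
manual_acc = float(np.mean(predicted == labels))

metric = tf.keras.metrics.SparseCategoricalAccuracy()
metric.update_state(labels, probs)

print(predicted, manual_acc, float(metric.result()))  # [1 0 2 1] 0.75 0.75
```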

7. Saving and Loading the Model

TensorFlow allows you to save and load models easily, facilitating model reuse and deployment:

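```python
# Save the model in the native Keras format; recent Keras versions
# treat the older HDF5 (.h5) format as legacy
model.save('my_model.keras')

# Load the model
new_model = tf.keras.models.load_model('my_model.keras')
```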
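A quick way to confirm that saving and loading preserved a model is to compare predictions before and after a round trip. The sketch below does this with a small hypothetical stand-in model saved to a temporary `.keras` file; it assumes a recent TensorFlow version that supports the `.keras` format.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Small stand-in model (hypothetical); any compiled Keras model works the same way.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(3, activation="softmax"),
])
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")

path = os.path.join(tempfile.mkdtemp(), "roundtrip.keras")
model.save(path)
restored = tf.keras.models.load_model(path)

# The restored model should produce the same outputs for the same input.
sample = np.random.default_rng(0).normal(size=(5, 4)).astype("float32")
original_out = model.predict(sample, verbose=0)
restored_out = restored.predict(sample, verbose=0)
print(np.allclose(original_out, restored_out))  # True
```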

Practical Example: Image Classification with MNIST

Here is a complete, end-to-end example that trains a convolutional neural network on the MNIST dataset with TensorFlow. Career-oriented Data Science Courses in Bangalore and similar cities typically provide intensive training in building and training models like this one, since such courses are generally aimed at practitioners and research scientists.

```python
import tensorflow as tf
from tensorflow.keras import layers, models

# Load and preprocess the data: add a channel axis and scale pixels to [0, 1]
(train_images, train_labels), (test_images, test_labels) = tf.keras.datasets.mnist.load_data()
train_images = train_images[..., tf.newaxis].astype('float32') / 255.0
test_images = test_images[..., tf.newaxis].astype('float32') / 255.0

train_dataset = (
    tf.data.Dataset.from_tensor_slices((train_images, train_labels))
    .shuffle(10000)
    .batch(32)
)
test_dataset = tf.data.Dataset.from_tensor_slices((test_images, test_labels)).batch(32)

# Build the model
model = models.Sequential([
    layers.Input(shape=(28, 28, 1)),
    layers.Conv2D(32, (3, 3), activation='relu'),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.MaxPooling2D((2, 2)),
    layers.Conv2D(64, (3, 3), activation='relu'),
    layers.Flatten(),
    layers.Dense(64, activation='relu'),
    layers.Dense(10, activation='softmax')
])

# Compile the model
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Train the model
history = model.fit(train_dataset, epochs=10, validation_data=test_dataset)

# Evaluate the model
test_loss, test_acc = model.evaluate(test_dataset, verbose=2)
print(f'\nTest accuracy: {test_acc}')

# Save the model in the native Keras format
model.save('mnist_model.keras')
```

Conclusion

TensorFlow is a powerful and flexible framework for developing and training ML models. Its comprehensive ecosystem, scalability, and ease of use make it an excellent choice for both beginners and experienced practitioners. By following the workflow outlined in this article, you can harness TensorFlow's capabilities to build and deploy efficient ML models for a wide range of applications. Whether you are working on research or real-world projects, TensorFlow provides the tools and resources needed to succeed in the rapidly evolving field of machine learning. These tools are covered in many Data Scientist Classes because they apply across the many business domains that increasingly rely on advanced data science.

For more details, visit us:

Name: ExcelR – Data Science, Generative AI, Artificial Intelligence Course in Bangalore

Address: Unit No. T-2 4th Floor, Raja Ikon Sy, No.89/1 Munnekolala, Village, Marathahalli – Sarjapur Outer Ring Rd, above Yes Bank, Marathahalli, Bengaluru, Karnataka 560037

Phone: 087929 28623

Email: enquiry@excelr.com